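Introduction

Hello, this is Fukuchinu from SCSK.

In this post, I'll show how to automatically forward Network Load Balancer (NLB) access logs to CloudWatch Logs.

NLB access logs are generated only when the listener uses TLS, and the only supported destination is S3. Many other AWS services, on the other hand, support delivery to CloudWatch Logs, so I expect many of you aggregate your logs there.

So in this post, we'll forward NLB access logs to CloudWatch Logs as well, so that logs can be aggregated and analyzed in one place.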

Prerequisites

Make sure that the VPC, subnets, route tables, internet gateway, network ACLs, ACM certificate, and domain have already been created.

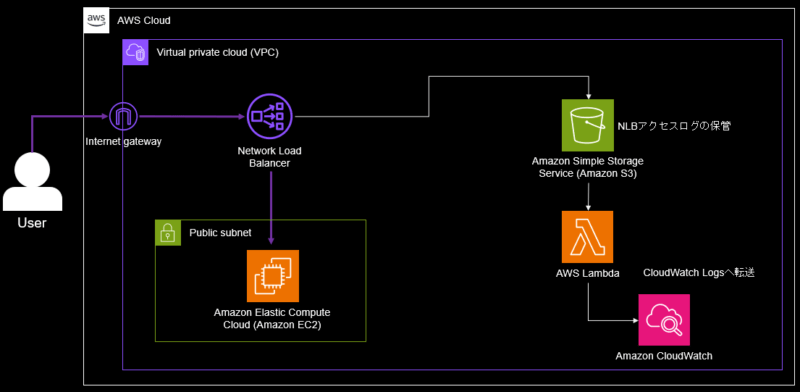

Architecture

- Users access the website through the Network Load Balancer (NLB).

- The NLB access logs are stored in S3.

- When an access log object is placed in S3, it triggers Lambda, which forwards the log to CloudWatch Logs.

This article also creates the NLB and related resources, but adjust as needed for your use case, for example by using only the Lambda and S3 parts.
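
The trigger in the last step can be sketched as follows. This is a minimal illustration, not the function used in this article: the helper name `iter_objects` and the sample bucket and key are hypothetical. It shows the shape of an S3 event notification and how the bucket name and object key can be pulled out of every record. Object keys in S3 events are URL-encoded, so the sketch decodes them with `unquote_plus`.

```python
from urllib.parse import unquote_plus


def iter_objects(event):
    """Yield (bucket, key) for every record in an S3 event notification."""
    for record in event.get("Records", []):
        s3_info = record["s3"]
        bucket = s3_info["bucket"]["name"]
        # Keys arrive URL-encoded in the event, so decode them before use
        key = unquote_plus(s3_info["object"]["key"])
        yield bucket, key


if __name__ == "__main__":
    # Hypothetical event with made-up names, for illustration only
    sample_event = {
        "Records": [
            {
                "s3": {
                    "bucket": {"name": "example-bucket"},
                    "object": {"key": "AWSLogs/123456789012/example.log.gz"},
                }
            }
        ]
    }
    for bucket, key in iter_objects(sample_event):
        print(bucket, key)
```

Looping over all records, rather than reading only the first one, is a defensive choice in case an event carries more than one record.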

Completed CloudFormation template

Deploy using the following template.

AWSTemplateFormatVersion: 2010-09-09
Description: NLB Accesslog push to CWlogs
Parameters:
  ResourceName:
    Type: String
  VpcId:
    Type: String
  PublicSubnetA:
    Type: String
  PublicSubnetC:
    Type: String
  AcmArn:
    Type: String
Resources:
  # ------------------------------------------------------------#
  # Network Load Balancer
  # ------------------------------------------------------------#
  LoadBalancer:
    Type: AWS::ElasticLoadBalancingV2::LoadBalancer
    # Create the bucket policy first so that enabling access logs can pass its permission check
    DependsOn: BucketPolicy
    Properties:
      Name: !Sub ${ResourceName}-NLB
      Subnets:
        - !Ref PublicSubnetA
        - !Ref PublicSubnetC
      Scheme: internet-facing
      Type: network
      SecurityGroups:
        - !Ref NLBSecurityGroup
      LoadBalancerAttributes:
        - Key: access_logs.s3.enabled
          Value: true
        - Key: access_logs.s3.bucket
          Value: !Ref MyS3Bucket
  LoadBalancerListener:
    Type: AWS::ElasticLoadBalancingV2::Listener
    Properties:
      LoadBalancerArn: !Ref LoadBalancer
      Port: 443
      Protocol: TLS
      DefaultActions:
        - Type: forward
          TargetGroupArn: !Ref TargetGroup
      Certificates:
        - CertificateArn: !Ref AcmArn
  TargetGroup:
    Type: AWS::ElasticLoadBalancingV2::TargetGroup
    Properties:
      Name: !Sub ${ResourceName}-targetgroup
      VpcId: !Ref VpcId
      Port: 80
      Protocol: TCP
      TargetType: instance
      Targets:
        - Id: !Ref EC2
          Port: 80
  NLBSecurityGroup:
    Type: "AWS::EC2::SecurityGroup"
    Properties:
      VpcId: !Ref VpcId
      SecurityGroupIngress:
        - IpProtocol: tcp
          FromPort: 443
          ToPort: 443
          CidrIp: "0.0.0.0/0"
      GroupName: !Sub "${ResourceName}-nlb-sg"
      GroupDescription: !Sub "${ResourceName}-nlb-sg"
      Tags:
        - Key: "Name"
          Value: !Sub "${ResourceName}-nlb-sg"
  # ------------------------------------------------------------#
  # EC2
  # ------------------------------------------------------------#
  EC2:
    Type: AWS::EC2::Instance
    Properties:
      ImageId: ami-0b193da66bc27147b
      InstanceType: t2.micro
      NetworkInterfaces:
        - AssociatePublicIpAddress: "true"
          DeviceIndex: "0"
          SubnetId: !Ref PublicSubnetA
          GroupSet:
            - !Ref EC2SecurityGroup
      UserData: !Base64 |
        #!/bin/bash
        yum update -y
        yum install httpd -y
        systemctl start httpd
        systemctl enable httpd
        touch /var/www/html/index.html
        echo "Hello,World!" | tee -a /var/www/html/index.html
      Tags:
        - Key: Name
          Value: !Sub "${ResourceName}-ec2"
  EC2SecurityGroup:
    Type: "AWS::EC2::SecurityGroup"
    Properties:
      VpcId: !Ref VpcId
      SecurityGroupIngress:
        - IpProtocol: tcp
          FromPort: 80
          ToPort: 80
          SourceSecurityGroupId: !Ref NLBSecurityGroup
      GroupName: !Sub "${ResourceName}-ec2-sg"
      GroupDescription: !Sub "${ResourceName}-ec2-sg"
      Tags:
        - Key: "Name"
          Value: !Sub "${ResourceName}-ec2-sg"
  # ------------------------------------------------------------#
  # IAM Policy IAM Role
  # ------------------------------------------------------------#
  LambdaPolicy:
    Type: AWS::IAM::ManagedPolicy
    Properties:
      ManagedPolicyName: !Sub ${ResourceName}-lambda-policy
      Description: IAM Managed Policy with S3 GetObject Access
      PolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: 'Allow'
            Action:
              - 's3:GetObject'
            Resource:
              - !Sub "arn:aws:s3:::${ResourceName}-${AWS::AccountId}-bucket/*"
  LambdaRole:
    Type: AWS::IAM::Role
    Properties:
      RoleName: !Sub ${ResourceName}-lambda-role
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Action: sts:AssumeRole
            Principal:
              Service:
                - lambda.amazonaws.com
      ManagedPolicyArns:
        - arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole
        - !GetAtt LambdaPolicy.PolicyArn
  # ------------------------------------------------------------#
  # S3
  # ------------------------------------------------------------#
  MyS3Bucket:
    Type: AWS::S3::Bucket
    # S3 validates the notification target, so the invoke permission must exist first
    DependsOn: LambdaInvokePermission
    DeletionPolicy: Retain
    Properties:
      BucketName: !Sub ${ResourceName}-${AWS::AccountId}-bucket
      NotificationConfiguration:
        LambdaConfigurations:
          - Event: 's3:ObjectCreated:*'
            Function: !GetAtt PushtoCWlogsFunction.Arn
  BucketPolicy:
    Type: AWS::S3::BucketPolicy
    Properties:
      Bucket: !Ref MyS3Bucket
      PolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Sid: AWSLogDeliveryAclCheck
            Effect: Allow
            Principal:
              Service: delivery.logs.amazonaws.com
            Action: s3:GetBucketAcl
            Resource: !Sub "arn:aws:s3:::${ResourceName}-${AWS::AccountId}-bucket"
            Condition:
              StringEquals:
                "aws:SourceAccount":
                  - !Sub ${AWS::AccountId}
              ArnLike:
                "aws:SourceArn":
                  - !Sub "arn:aws:logs:${AWS::Region}:${AWS::AccountId}:*"
          - Sid: AWSLogDeliveryWrite
            Effect: Allow
            Principal:
              Service: delivery.logs.amazonaws.com
            Action: s3:PutObject
            Resource: !Sub "arn:aws:s3:::${ResourceName}-${AWS::AccountId}-bucket/AWSLogs/${AWS::AccountId}/*"
            Condition:
              StringEquals:
                "s3:x-amz-acl": "bucket-owner-full-control"
                "aws:SourceAccount":
                  - !Sub ${AWS::AccountId}
              ArnLike:
                "aws:SourceArn":
                  - !Sub "arn:aws:logs:${AWS::Region}:${AWS::AccountId}:*"
  # ------------------------------------------------------------#
  # Lambda
  # ------------------------------------------------------------#
  PushtoCWlogsFunction:
    Type: AWS::Lambda::Function
    Properties:
      FunctionName: !Sub ${ResourceName}-lambda-function
      Role: !GetAtt LambdaRole.Arn
      Runtime: python3.12
      Handler: index.lambda_handler
      Environment:
        Variables:
          LOGGROUPNAME: LGR-NLB-Access-Logs
      Code:
        ZipFile: !Sub |
          import boto3
          import time
          import json
          import io
          import gzip
          import os

          s3_client = boto3.client('s3')
          cwlogs_client = boto3.client('logs')

          # Target log group
          LOG_GROUP_NAME = os.environ['LOGGROUPNAME']

          # Field names of the access log format
          fields = ["type","version","time","elb","listener","client:port","destination:port","connection_time","tls_handshake_time",
                    "received_bytes","sent_bytes","incoming_tls_alert","chosen_cert_arn","chosen_cert_serial","tls_cipher","tls_protocol_version",
                    "tls_named_group","domain_name","alpn_fe_protocol","alpn_be_protocol","alpn_client_preference_list","tls_connection_creation_time"]

          # Get the bucket name and object key from the S3 event
          def parse_event(event):
              return {
                  "bucket_name": event['Records'][0]['s3']['bucket']['name'],
                  "bucket_key": event['Records'][0]['s3']['object']['key']
              }

          # Decompress the gzip-compressed log file
          def convert_response(response):
              body = response['Body'].read()
              body_unzip = gzip.open(io.BytesIO(body))
              return body_unzip.read().decode('utf-8')

          # Write one log event to CloudWatch Logs
          def put_log(log, LOG_STREAM_NAME):
              try:
                  response = cwlogs_client.put_log_events(
                      logGroupName = LOG_GROUP_NAME,
                      logStreamName = LOG_STREAM_NAME,
                      logEvents = [
                          {
                              'timestamp': int(time.time() * 1000),
                              'message' : log
                          },
                      ]
                  )
                  return response
              except cwlogs_client.exceptions.ResourceNotFoundException:
                  # The log stream does not exist yet: create it, then retry
                  cwlogs_client.create_log_stream(
                      logGroupName = LOG_GROUP_NAME, logStreamName = LOG_STREAM_NAME)
                  response = put_log(log, LOG_STREAM_NAME)
                  return response
              except Exception as e:
                  print("error",e)
                  raise

          # Convert each log line to a JSON string
          def transform_json(log_list):
              json_data = []
              for log_line in log_list:
                  # Use a fresh dict per line so values never carry over between lines
                  data = {}
                  field_item = log_line.split()
                  for field, value in zip(fields, field_item):
                      data[field] = value
                  json_data.append(json.dumps(data))
              return json_data

          def lambda_handler(event, context):
              bucket_info = parse_event(event)
              response = s3_client.get_object(
                  Bucket = bucket_info['bucket_name'], Key = bucket_info['bucket_key'])
              # Element 4 of the "_"-separated key is the end-time part of the log file name
              LOG_STREAM_NAME = bucket_info['bucket_key'].split("_")[4]
              log_text = convert_response(response)
              log_list = log_text.splitlines()
              log_data = transform_json(log_list)
              for log in log_data:
                  response = put_log(log, LOG_STREAM_NAME)
              return {
                  'statusCode': 200,
              }
  LambdaInvokePermission:
    Type: 'AWS::Lambda::Permission'
    Properties:
      FunctionName: !GetAtt PushtoCWlogsFunction.Arn
      Action: 'lambda:InvokeFunction'
      Principal: s3.amazonaws.com
      SourceAccount: !Ref 'AWS::AccountId'
      SourceArn: !Sub 'arn:aws:s3:::${ResourceName}-${AWS::AccountId}-bucket'
  PushtoCWlogsFunctionLogGroup:
    Type: AWS::Logs::LogGroup
    Properties:
      LogGroupName: !Sub "/aws/lambda/${PushtoCWlogsFunction}"
  NLBAccessLogGroup:
    Type: AWS::Logs::LogGroup
    Properties:
      LogGroupName: LGR-NLB-Access-Logs
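
If you prefer to deploy the template from a script instead of the console, the request could look roughly like the sketch below. The function names, stack name, and parameter values are hypothetical; only the five parameter keys come from the template above. Because the template creates IAM resources with explicit names (`RoleName`, `ManagedPolicyName`), the `CAPABILITY_NAMED_IAM` capability has to be acknowledged.

```python
def build_stack_request(stack_name, template_body, resource_name,
                        vpc_id, subnet_a, subnet_c, acm_arn):
    """Build keyword arguments for CloudFormation create_stack."""
    parameters = {
        "ResourceName": resource_name,
        "VpcId": vpc_id,
        "PublicSubnetA": subnet_a,
        "PublicSubnetC": subnet_c,
        "AcmArn": acm_arn,
    }
    return {
        "StackName": stack_name,
        "TemplateBody": template_body,
        "Parameters": [
            {"ParameterKey": key, "ParameterValue": value}
            for key, value in parameters.items()
        ],
        # Required because the template names its IAM role and managed policy
        "Capabilities": ["CAPABILITY_NAMED_IAM"],
    }


def deploy(request):
    """Create the stack and wait for completion (needs AWS credentials)."""
    import boto3  # imported here so the request builder works without boto3

    cfn = boto3.client("cloudformation")
    cfn.create_stack(**request)
    cfn.get_waiter("stack_create_complete").wait(StackName=request["StackName"])
```

Note that `ResourceName` becomes part of the S3 bucket name, so it should contain only lowercase letters, numbers, and hyphens.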

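Lambda code

Here is the Python file that forwards the NLB access logs. Please verify it in your own environment before using it.

In this article the code is written inline in the CloudFormation template, so you do not need to handle this file separately.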

import boto3
import time
import json
import io
import gzip
import os

s3_client = boto3.client('s3')
cwlogs_client = boto3.client('logs')

# Target log group
LOG_GROUP_NAME = os.environ['LOGGROUPNAME']

# Field names of the access log format
fields = ["type","version","time","elb","listener","client:port","destination:port","connection_time","tls_handshake_time",
          "received_bytes","sent_bytes","incoming_tls_alert","chosen_cert_arn","chosen_cert_serial","tls_cipher","tls_protocol_version",
          "tls_named_group","domain_name","alpn_fe_protocol","alpn_be_protocol","alpn_client_preference_list","tls_connection_creation_time"]

# Get the bucket name and object key from the S3 event
def parse_event(event):
    return {
        "bucket_name": event['Records'][0]['s3']['bucket']['name'],
        "bucket_key": event['Records'][0]['s3']['object']['key']
    }

# Decompress the gzip-compressed log file
def convert_response(response):
    body = response['Body'].read()
    body_unzip = gzip.open(io.BytesIO(body))
    return body_unzip.read().decode('utf-8')

# Write one log event to CloudWatch Logs
def put_log(log, LOG_STREAM_NAME):
    try:
        response = cwlogs_client.put_log_events(
            logGroupName = LOG_GROUP_NAME,
            logStreamName = LOG_STREAM_NAME,
            logEvents = [
                {
                    'timestamp': int(time.time() * 1000),
                    'message' : log
                },
            ]
        )
        return response
    except cwlogs_client.exceptions.ResourceNotFoundException:
        # The log stream does not exist yet: create it, then retry
        cwlogs_client.create_log_stream(
            logGroupName = LOG_GROUP_NAME, logStreamName = LOG_STREAM_NAME)
        response = put_log(log, LOG_STREAM_NAME)
        return response
    except Exception as e:
        print("error",e)
        raise

# Convert each log line to a JSON string
def transform_json(log_list):
    json_data = []
    for log_line in log_list:
        # Use a fresh dict per line so values never carry over between lines
        data = {}
        field_item = log_line.split()
        for field, value in zip(fields, field_item):
            data[field] = value
        json_data.append(json.dumps(data))
    return json_data

def lambda_handler(event, context):
    bucket_info = parse_event(event)
    response = s3_client.get_object(
        Bucket = bucket_info['bucket_name'], Key = bucket_info['bucket_key'])
    # Element 4 of the "_"-separated key is the end-time part of the log file name
    LOG_STREAM_NAME = bucket_info['bucket_key'].split("_")[4]
    log_text = convert_response(response)
    log_list = log_text.splitlines()
    log_data = transform_json(log_list)
    for log in log_data:
        response = put_log(log, LOG_STREAM_NAME)
    return {
        'statusCode': 200,
    }
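
The function above sends one `put_log_events` call per log line and stamps each event with the time of the Lambda run. As a possible variation, the sketch below builds the events locally, takes the timestamp from the log's own `time` field, and splits the events into batches. It is only a sketch under assumptions: the sample line is made up, the `time` field is assumed to be UTC in `YYYY-MM-DDTHH:MM:SS` form, and the helper names are hypothetical. The batch size of 10,000 matches the documented maximum number of events per `put_log_events` call.

```python
import json
from datetime import datetime, timezone

FIELDS = ["type", "version", "time", "elb", "listener", "client:port",
          "destination:port", "connection_time", "tls_handshake_time",
          "received_bytes", "sent_bytes", "incoming_tls_alert",
          "chosen_cert_arn", "chosen_cert_serial", "tls_cipher",
          "tls_protocol_version", "tls_named_group", "domain_name",
          "alpn_fe_protocol", "alpn_be_protocol",
          "alpn_client_preference_list", "tls_connection_creation_time"]

# Made-up sample line with placeholder values, for illustration only
SAMPLE_LINE = (
    "tls 2.0 2024-01-01T00:00:05 net/example-NLB/0123456789abcdef "
    "0123456789abcdef 203.0.113.10:51341 10.0.0.10:80 5 2 98 246 - "
    "arn:aws:acm:ap-northeast-1:123456789012:certificate/example - "
    "ECDHE-RSA-AES128-GCM-SHA256 tlsv12 - www.example.com - - - "
    "2024-01-01T00:00:00"
)


def to_epoch_ms(log_time):
    """Convert a log time (assumed UTC) to epoch milliseconds."""
    parsed = datetime.strptime(log_time, "%Y-%m-%dT%H:%M:%S")
    return int(parsed.replace(tzinfo=timezone.utc).timestamp() * 1000)


def build_events(lines):
    """Turn raw log lines into log events sorted by timestamp."""
    events = []
    for line in lines:
        if not line.strip():
            continue  # skip blank lines
        record = dict(zip(FIELDS, line.split()))
        events.append({
            "timestamp": to_epoch_ms(record["time"]),
            "message": json.dumps(record),
        })
    # put_log_events expects the events of a batch in chronological order
    return sorted(events, key=lambda event: event["timestamp"])


def chunk(items, size=10000):
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


if __name__ == "__main__":
    for batch in chunk(build_events([SAMPLE_LINE])):
        print(len(batch), batch[0]["message"])
```

Each batch could then be passed as `logEvents` to a single `put_log_events` call, which keeps the number of API calls low for files with many lines. A batch also has a total size limit, which this sketch does not check.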

Verification

Registering the NLB DNS name

So that the site can be reached through a custom domain, add an Alias record that points to the NLB's DNS name in your Route 53 hosted zone.

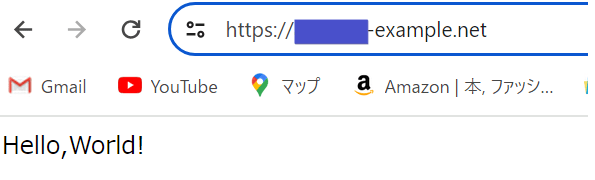
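
The same Alias record can also be registered from a script. The sketch below only builds the change batch. The function names and all values are hypothetical placeholders, and the NLB's DNS name and canonical hosted zone ID are assumed to have been looked up beforehand (for example with `describe_load_balancers`).

```python
def build_alias_change(record_name, nlb_dns_name, nlb_hosted_zone_id):
    """Build a Route 53 change batch that upserts an Alias A record."""
    return {
        "Changes": [
            {
                "Action": "UPSERT",
                "ResourceRecordSet": {
                    "Name": record_name,
                    "Type": "A",
                    "AliasTarget": {
                        # Hosted zone ID of the NLB itself, not of your domain
                        "HostedZoneId": nlb_hosted_zone_id,
                        "DNSName": nlb_dns_name,
                        "EvaluateTargetHealth": False,
                    },
                },
            }
        ]
    }


def apply_change(domain_hosted_zone_id, change_batch):
    """Send the change to Route 53 (needs AWS credentials)."""
    import boto3  # imported here so the builder works without boto3

    route53 = boto3.client("route53")
    return route53.change_resource_record_sets(
        HostedZoneId=domain_hosted_zone_id, ChangeBatch=change_batch)
```

Using `UPSERT` makes the call safe to repeat, since it creates the record if it is missing and updates it otherwise.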

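Accessing via the custom domain

Access the NLB you created using the custom domain. Each access attempt is recorded in an access log that is stored in S3 (delivery to S3 can take several minutes).

The upload of the object to S3 then triggers the Lambda function.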
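
When the function is triggered, it derives the log stream name from the object key. The sketch below illustrates that step on a made-up key and, as a defensive variation that is not part of the original code, returns `None` for objects that do not look like access log files. The helper name `stream_name_from_key` and the sample key are hypothetical.

```python
import posixpath


def stream_name_from_key(key):
    """Return the end-time part of an NLB access log key, or None."""
    # Work on the file name only, so a bucket prefix cannot shift the indices
    parts = posixpath.basename(key).split("_")
    if len(parts) != 6 or not key.endswith(".log.gz"):
        return None  # not an access log file: let the caller skip it
    return parts[4]


if __name__ == "__main__":
    # Made-up key following the documented file name layout
    sample_key = (
        "AWSLogs/123456789012/elasticloadbalancing/ap-northeast-1/2024/01/01/"
        "123456789012_elasticloadbalancing_ap-northeast-1_"
        "net.example-NLB.0123456789abcdef_20240101T0005Z_abcd1234.log.gz"
    )
    print(stream_name_from_key(sample_key))
```

With this naming, all log files that share the same end time land in the same log stream.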

Checking CloudWatch Logs

You can see that the NLB access logs are stored in JSON format. They can now be analyzed in CloudWatch Logs in the same way as logs from other services.
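
Because every message is a JSON object, simple aggregations become straightforward. As a small local illustration (the helper name and the sample messages are hypothetical, and the messages are assumed to have been fetched from the log group already), the sketch below counts events by the value of one field.

```python
import json
from collections import Counter


def count_by_field(messages, field):
    """Count JSON log messages by the value of one field."""
    counter = Counter()
    for message in messages:
        record = json.loads(message)
        # "-" is used when the field is missing from a record
        counter[record.get(field, "-")] += 1
    return counter


if __name__ == "__main__":
    # Made-up messages in the shape the Lambda function writes
    sample_messages = [
        json.dumps({"tls_protocol_version": "tlsv12"}),
        json.dumps({"tls_protocol_version": "tlsv13"}),
        json.dumps({"tls_protocol_version": "tlsv12"}),
    ]
    print(count_by_field(sample_messages, "tls_protocol_version"))
```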

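Conclusion

How was it?

Occasionally, for reasons such as reusing an existing log monitoring platform, you need to aggregate and analyze AWS service logs in CloudWatch Logs. In that case, forward the logs to CloudWatch Logs as shown here and keep using CloudWatch Logs as before.

I hope this article is helpful to you.

See you next time. Saunara~🔥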